Defer offscreen images on mobile

Mobile Image Deferral: the standard

Mobile performance is often held back by network latency (RTT) and main-thread CPU availability. Deferring offscreen images on mobile addresses both by preventing bandwidth contention on the critical rendering path and distributing image decoding costs over the session duration.

This document explains how to defer images effectively on mobile, when to do so, and how to handle the specific mechanical constraints of mobile viewports.

1. Defer offscreen images on mobile: native lazy loading

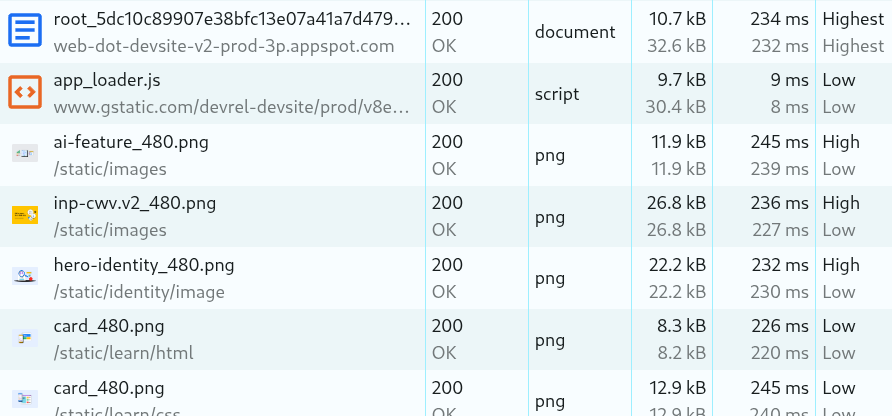

When a browser loads a page, it opens a limited number of parallel connections (the exact number depends on many factors, but 6 per domain is a common limit over HTTP/1.1). If these connections are busy downloading offscreen images (e.g., a footer logo or carousel slide), critical resources (typically the LCP image, important scripts, and fonts) must compete for slots and bandwidth. This phenomenon, known as network contention, directly degrades Core Web Vitals.
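The effect of contention can be sketched with a deliberately simplified queueing model. The function name `scheduleDownloads`, the file names, and the download durations are hypothetical, and the model assumes one resource per connection slot in document order; real browsers also apply request prioritization and, on HTTP/2 and later, multiplexing.

```javascript
// Simplified model: each connection slot downloads one resource at a time,
// and resources are requested in the order given.
function scheduleDownloads(resources, maxParallel = 6) {
  const slotFreeAt = new Array(maxParallel).fill(0); // ms at which each slot frees up
  const startTimes = {};
  for (const { name, durationMs } of resources) {
    // Pick the slot that becomes free first.
    let slot = 0;
    for (let i = 1; i < slotFreeAt.length; i++) {
      if (slotFreeAt[i] < slotFreeAt[slot]) slot = i;
    }
    startTimes[name] = slotFreeAt[slot];
    slotFreeAt[slot] += durationMs;
  }
  return startTimes;
}

// Hypothetical page: six offscreen images are requested before the hero image.
const offscreenImages = Array.from({ length: 6 }, (_, i) => ({
  name: `offscreen-${i + 1}.jpg`,
  durationMs: 300,
}));
const heroImage = { name: 'hero.jpg', durationMs: 400 };

const everythingEager = scheduleDownloads([...offscreenImages, heroImage]);
const offscreenDeferred = scheduleDownloads([heroImage]);

console.log(everythingEager['hero.jpg']);   // 300 (waits for a free slot)
console.log(offscreenDeferred['hero.jpg']); // 0 (starts immediately)
```

In this toy model the hero image cannot even begin downloading until one of the offscreen images finishes, which is the delay that deferral removes.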

By deferring offscreen images with the native loading attribute, we can prioritize important resources and optimize the Critical Rendering Path. The browser fetches only what is visible or about to become visible, reserving bandwidth for the assets that directly affect First Contentful Paint (FCP) and Largest Contentful Paint (LCP). Native lazy loading hands this prioritization logic to the browser's own internal mechanism, which is much faster, and removes the need for older, slower JavaScript libraries.

Implementation

For all images below the initial viewport ("the fold"), add the loading="lazy" attribute.

    <!-- Standard Deferred Image -->
    <img src="product-detail.jpg"
         loading="lazy"
         alt="Side view of the chassis"
         width="800"
         height="600"
         decoding="async">

How lazy loading works on mobile: The Browser Heuristic

Native lazy loading is superior to JavaScript solutions because the browser adjusts the loading threshold (when an image is triggered for download) based on the Effective Connection Type (ECT).

- On 4G/WiFi: The Blink engine (Chrome/Edge) employs a conservative threshold (e.g., 1250px). It assumes low latency and fetches the image only when the user is relatively close to the viewport.

- On 3G/Slow-2G: The threshold expands (e.g., 2500px). The browser initiates the request much earlier relative to the scroll position to compensate for high round-trip times, ensuring the image is ready before the user scrolls it into view.
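The heuristic in the two bullets above can be sketched as follows. This is illustrative only: browsers do not expose this logic as an API, the function names are hypothetical, and the pixel values are simply the example thresholds quoted above.

```javascript
// Example thresholds from the text: a smaller look-ahead distance on fast
// connections, a larger one on slow connections.
function lazyLoadThresholdPx(effectiveType) {
  return effectiveType === '4g' ? 1250 : 2500;
}

// Returns true once the image's top edge is within the threshold distance
// below the bottom of the viewport. All values are in CSS pixels, and
// imageTop is measured from the top of the page.
function shouldStartFetch(imageTop, scrollY, viewportHeight, effectiveType) {
  const viewportBottom = scrollY + viewportHeight;
  return imageTop - viewportBottom <= lazyLoadThresholdPx(effectiveType);
}

// An image 3000px down the page, a 700px-tall viewport, scrolled 500px:
// the image is 1800px below the bottom edge of the viewport.
console.log(shouldStartFetch(3000, 500, 700, '4g')); // false
console.log(shouldStartFetch(3000, 500, 700, '3g')); // true
```

On the slower connection the same image is requested earlier in the scroll, which buys extra time for the round trip.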

Critical Exception: The LCP Candidate

A common performance regression occurs when developers apply loading="lazy" to the Largest Contentful Paint (LCP) element (typically the hero image). This delays the fetch until layout is complete.

Correct LCP Strategy: The LCP image must be eager-loaded and prioritized.

    <!-- Hero Image: Eager and Prioritized -->
    <img src="hero.jpg"
         alt="Summer Collection"
         width="1200"
         height="800"
         loading="eager"
         fetchpriority="high">

2. Mobile complexities: Viewport and Touch

Mobile viewports introduce specific rendering challenges that native implementation handles more robustly than script-based solutions.

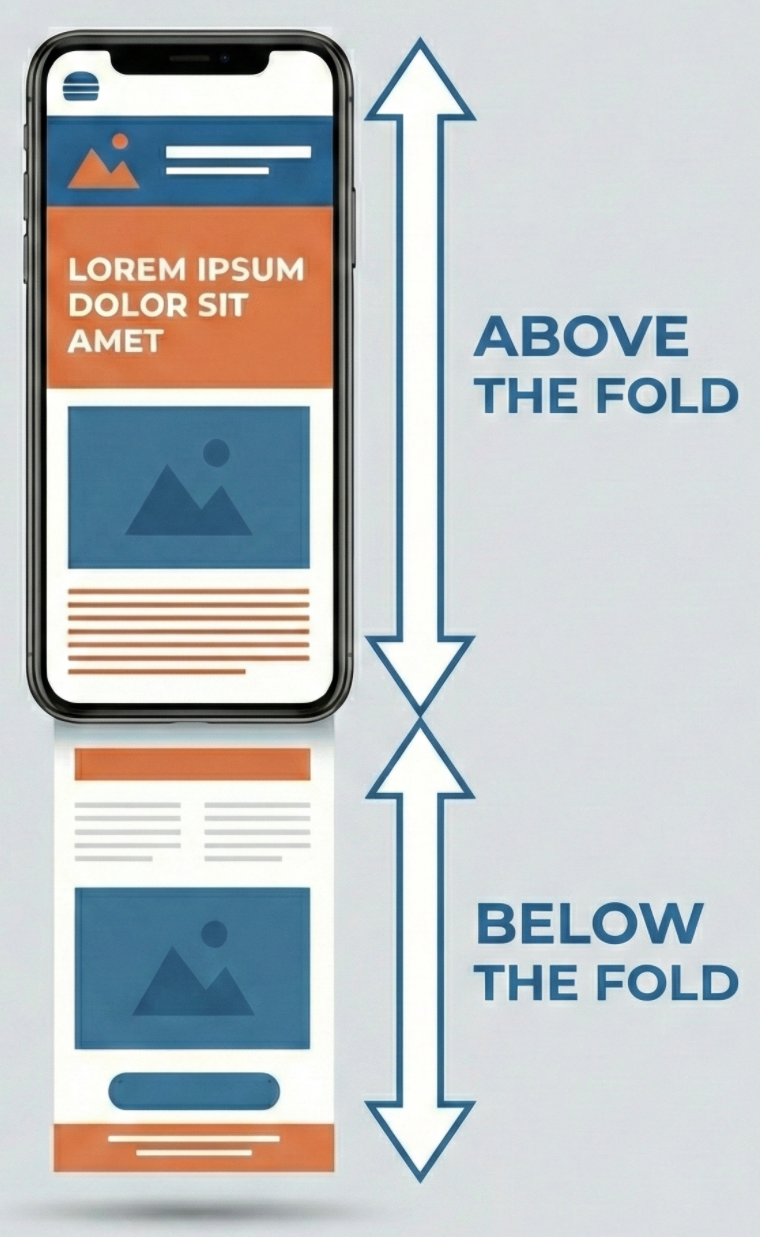

- The Viewport: The visible rectangular area of the browser window. On mobile, this is dynamic; it changes dimensions based on device orientation (portrait vs. landscape) and the state of the browser chrome (URL bars retracting).

- The Fold: The exact bottom edge of the viewport. It is the threshold that separates visible content from off-screen content.

- Above the Fold: Any content visible immediately upon page load without scrolling. Images here are often critical and should almost never be lazy-loaded.

- Below the Fold: Any content located vertically past the fold. This content is Non-Critical and must be deferred until the user scrolls near it.
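As a rough sketch, the definitions above translate into a small decision rule for templates or build scripts. The helper name `loadingAttributesFor` and its input shape are hypothetical, and the viewport height passed in is an assumption made at render time, since the server cannot know the real device height.

```javascript
// Picks loading-related attributes from an image's position on the page.
// `top` is the image's distance from the top of the page in CSS pixels.
function loadingAttributesFor({ top, isLcpCandidate = false }, viewportHeight) {
  if (isLcpCandidate) {
    // The LCP image: fetch immediately and with high priority.
    return { loading: 'eager', fetchpriority: 'high' };
  }
  if (top < viewportHeight) {
    // Above the fold but not the LCP element: eager, default priority.
    return { loading: 'eager' };
  }
  // Below the fold: defer the fetch and decode asynchronously.
  return { loading: 'lazy', decoding: 'async' };
}

const assumedViewportHeight = 700; // hypothetical mobile viewport height

console.log(loadingAttributesFor({ top: 0, isLcpCandidate: true }, assumedViewportHeight));
console.log(loadingAttributesFor({ top: 20 }, assumedViewportHeight));
console.log(loadingAttributesFor({ top: 1400 }, assumedViewportHeight));
```

Choosing a conservative (small) assumed height errs on the side of lazy-loading borderline images, so the LCP candidate should always be flagged explicitly rather than inferred from position.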

The Dynamic Viewport

On mobile browsers, the viewport height (vh) is fluid. As the user initiates a touch scroll, the URL bar and navigation controls often retract, changing the visible area size.

JavaScript-based image deferral libraries often calculate the viewport height (window.innerHeight) only once, at the start of the page load. When the mobile browser later resizes the visible area by hiding the URL bar during a scroll, these scripts continue to use the old, smaller height value. As a result, images can remain unloaded even after they have physically entered the expanded viewport area, which makes for a poor user experience.

Native Handling fixes this problem since the browser's internal layout engine tracks the visual viewport automatically, ensuring triggers fire regardless of any viewport size changes.
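The stale-height problem can be shown with a few lines of arithmetic. The pixel values below are hypothetical, and `hasEnteredViewport` is an illustrative name for the same comparison that script-based loaders typically perform.

```javascript
// An image counts as "in view" once its top edge is above the viewport's
// bottom edge. rectTop is measured from the top of the viewport, like
// getBoundingClientRect().top.
function hasEnteredViewport(rectTop, viewportHeight) {
  return rectTop < viewportHeight;
}

// Hypothetical numbers: 560px are visible while the URL bar is shown,
// 640px once it retracts during a scroll.
const heightAtPageLoad = 560;
const heightAfterUrlBarHides = 640;
const imageRectTop = 600;

console.log(hasEnteredViewport(imageRectTop, heightAtPageLoad));       // false (stale cached height)
console.log(hasEnteredViewport(imageRectTop, heightAfterUrlBarHides)); // true (image is on screen)
```

A script that caches the first value never loads this image, even though it is visible. A script-based loader that has to exist can avoid the bug by reading window.innerHeight on every check, or by listening for the resize event on window.visualViewport where that API is available.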

3. Mobile Image Decoding and CPU Throttling

Mobile devices have limited CPU capacity, and image decoding on them can be relatively slow and expensive. Converting a JPEG into a bitmap requires many CPU cycles. On a mobile processor, decoding a sequence of large images can block the main thread for 50ms–100ms each, causing input latency.
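To put those numbers in perspective, a quick calculation shows how many whole frames fit inside such a blocking period. The function name `framesMissed` is illustrative, and the 60 frames per second default is an assumption about the display's refresh rate.

```javascript
// Number of whole frames that elapse while the main thread is blocked.
function framesMissed(blockedMs, fps = 60) {
  return Math.floor((blockedMs * fps) / 1000);
}

console.log(framesMissed(50));  // 3
console.log(framesMissed(100)); // 6
```

At 60 frames per second, a single 100ms decode therefore spans roughly six frames during which a tap or scroll gesture cannot be processed.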

The Fix: content-visibility

To solve this, we can use the CSS declaration content-visibility: auto. It works as a form of "lazy rendering": it instructs the browser to skip the layout and painting phases for off-screen elements entirely. The element still exists in the DOM, but its contents are not rendered until it approaches the viewport.

Because this optimization works by skipping the rendering of an element's subtree, you cannot apply it directly to an <img> tag (which has no subtree). Instead, apply content-visibility to the product container or image card that hosts the image and its content.

    @media (max-width: 768px) {
      .image-card, .product-card {
        /* Skip rendering of the container and its children */
        content-visibility: auto;
        /* Essential: Prevents container from collapsing to 0px height */
        contain-intrinsic-size: auto 300px;
      }
    }

This ensures that even if an image is downloaded, the browser does not pay the layout/paint cost until the user actually scrolls to it.

4. Legacy Methodologies: Why to avoid them

Before native support, developers relied on JavaScript for mobile image deferral. These methods are still widely used but should be considered technical debt.

The "Scroll Handler" Era (2010–2016)

Early implementations attached event listeners to the scroll event.

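    // OBSOLETE: Do not use
    // Collect every image whose real URL is parked in data-src.
    const images = document.querySelectorAll('img[data-src]');

    window.addEventListener('scroll', () => {
      images.forEach(img => {
        // Forces a synchronous layout read on every scroll event.
        if (img.getBoundingClientRect().top < window.innerHeight) {
          img.src = img.dataset.src;
        }
      });
    });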

Main Thread Blocking: The scroll event fires dozens of times per second. Executing logic and calculating layout (getBoundingClientRect) during active scrolling causes frame drops (jank).

Layout Thrashing: Querying geometric properties forces the browser to synchronously recalculate style and layout, a computationally expensive operation on mobile CPUs.

The IntersectionObserver Era (2016–2019)

The IntersectionObserver API improved performance by asynchronously observing changes in element visibility.

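    // LEGACY: Use native loading where possible
    const observer = new IntersectionObserver((entries) => {
      entries.forEach(entry => {
        if (entry.isIntersecting) {
          const img = entry.target;
          // Swap in the real URL, then stop watching this image.
          img.src = img.dataset.src;
          observer.unobserve(img);
        }
      });
    });

    // Start observing every image whose real URL is parked in data-src.
    document.querySelectorAll('img[data-src]').forEach(img => observer.observe(img));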

Script Dependency: It requires JavaScript execution. If the main thread is busy hydrating a framework (React/Vue), the images remain unloaded even if they are in the viewport.

Lack of Network Awareness: Unlike native loading, IntersectionObserver uses fixed margins (e.g., rootMargin: '200px'). It does not automatically expand its buffer on slow networks, leading to "white flashes" for users on poor connections.
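Where an observer-based loader cannot be removed yet, one common transitional pattern is to use it only as a fallback for browsers without native support. The sketch below uses hypothetical function names; the `'loading' in HTMLImageElement.prototype` check is the usual feature test, and the prototype is passed in as a parameter so the logic can also run outside a browser.

```javascript
// True when the browser understands the loading attribute on <img>.
// `imgProto` defaults to the browser's HTMLImageElement prototype, if any.
function supportsNativeLazyLoading(
  imgProto = typeof HTMLImageElement !== 'undefined'
    ? HTMLImageElement.prototype
    : undefined
) {
  return Boolean(imgProto) && 'loading' in imgProto;
}

// Decides which deferral mechanism a page should use.
function chooseDeferralStrategy(imgProto) {
  return supportsNativeLazyLoading(imgProto) ? 'native' : 'intersection-observer';
}

console.log(chooseDeferralStrategy({ loading: 'auto' })); // 'native'
console.log(chooseDeferralStrategy({}));                  // 'intersection-observer'
```

With this split, the script only attaches observers when the result is 'intersection-observer', and images in supporting browsers rely on loading="lazy" alone.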
